We want more quality content for our websites, but it’s difficult to produce enough. So how can we scale the content creation process, especially for ecommerce sites with plenty of products?

If you paid a copywriter to write thousands of product snippets from scratch, you’d likely be out of pocket pretty quickly.

What if you pay for 1,000 new product descriptions, but only half of those products are still live one month later? Clearly, you need a faster, more cost-effective approach. This is where ChatGPT can help.

ChatGPT’s native web interface is really helpful and a great time-saver.

But if we have hundreds or thousands of product descriptions to create, there’s a more efficient way of using ChatGPT without copying and pasting prompts. Here’s how.

Mass production of content snippets: Scaling the output

If you have an ecommerce website, you might wish to produce product snippets using data from a product information management (PIM) system.

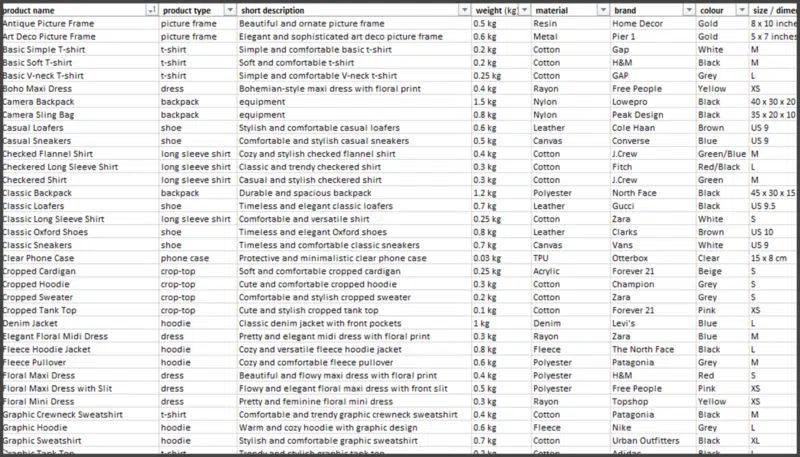

Let’s say you have the data on a spreadsheet.

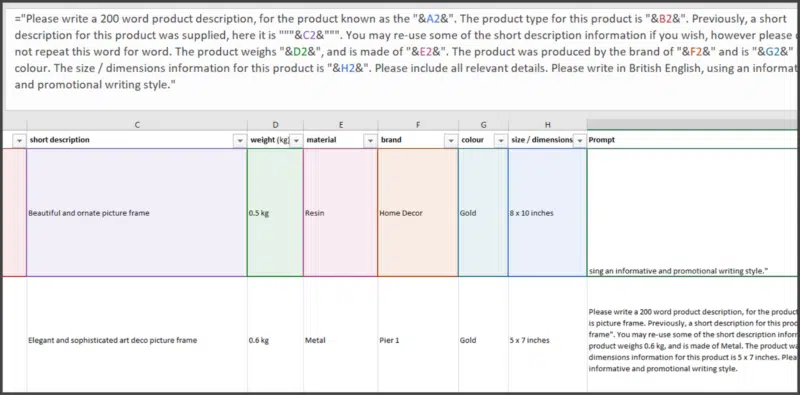

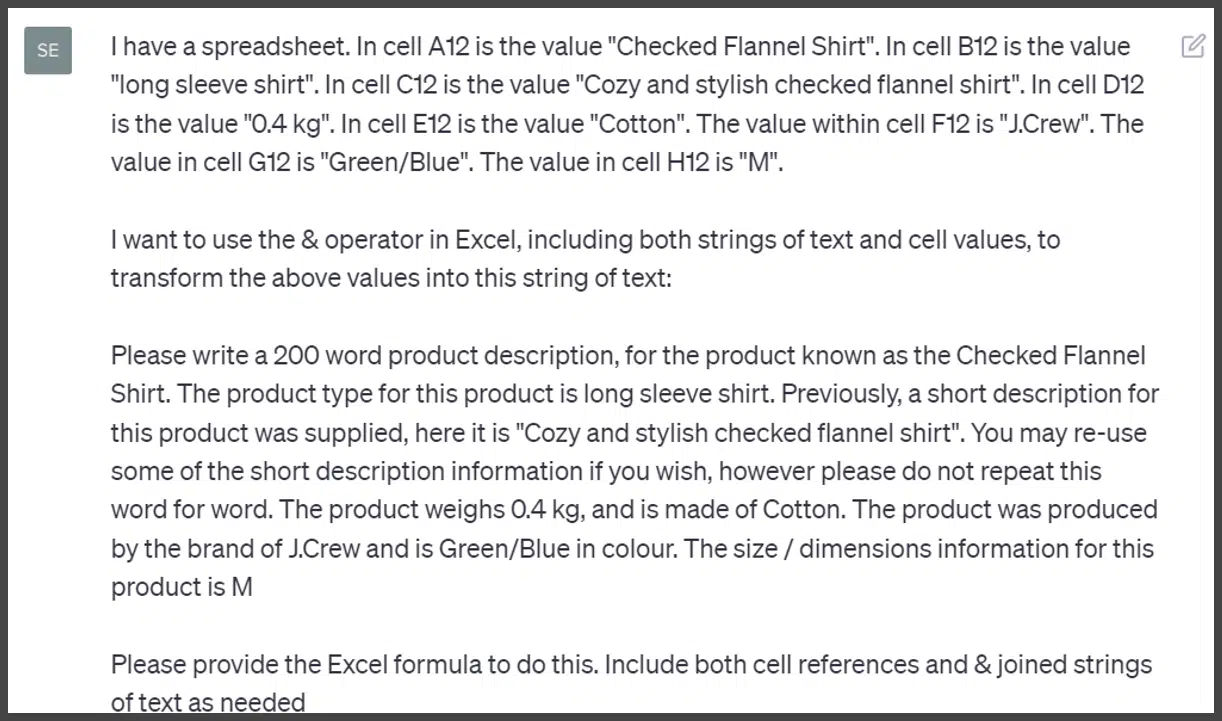

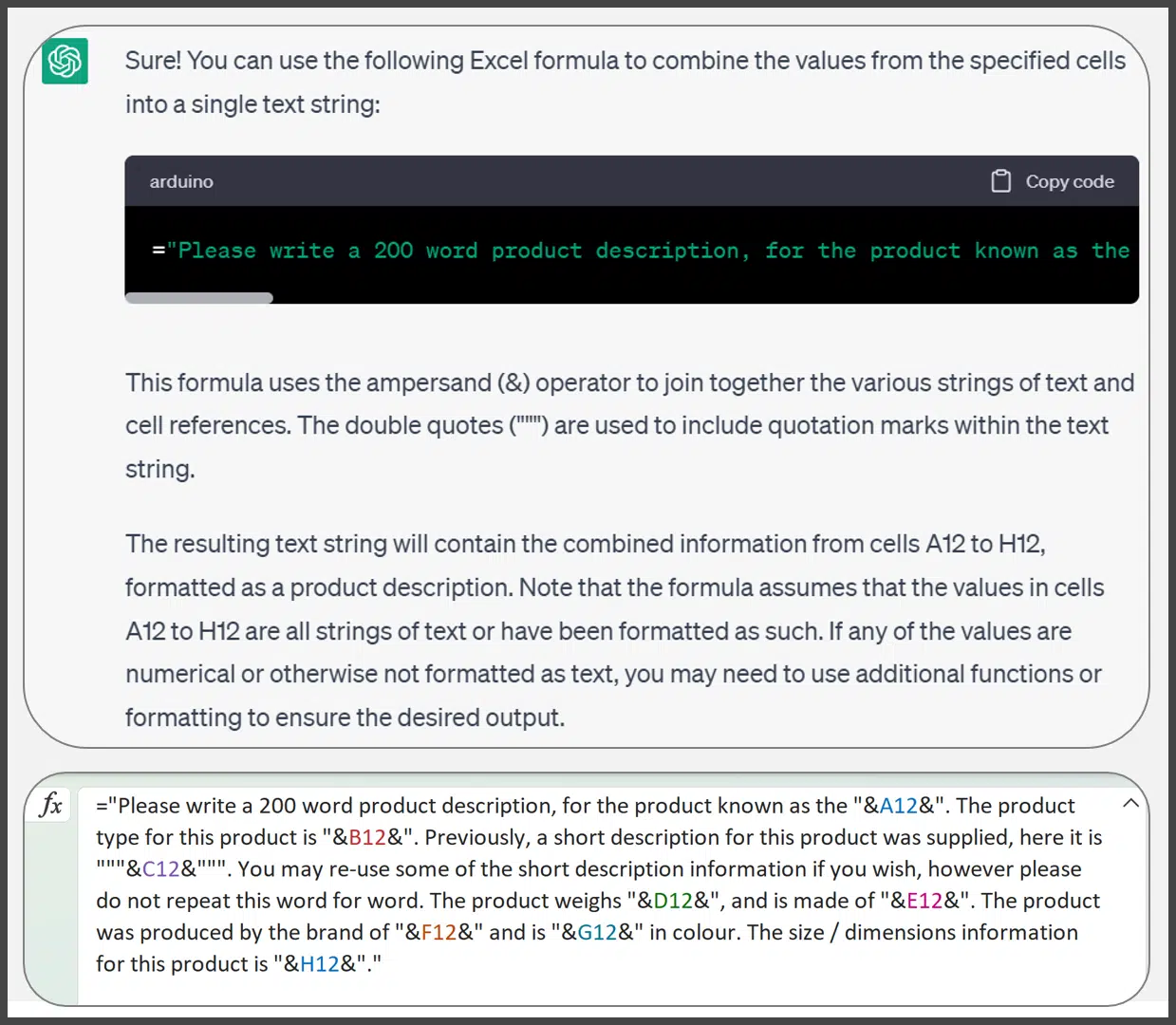

We can use Excel formulas to concatenate data (joining cells with the “&” operator) into rich prompts, ready for ChatGPT.

Note that your formula may require one or more “IF” statements, because your data may have gaps.

For example, some products may not have certain parameters (data in certain columns) specified. Your formula needs to be flexible enough to handle this, and you can always ask ChatGPT to help you write it.
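The same conditional logic can be sketched in Python, which may be easier to maintain than nested IF statements once you have many columns. This is a minimal sketch, not the author’s formula: the column names (`name`, `brand`, `material`, `colour`, `fit`) and the prompt wording are hypothetical placeholders. The point is that each clause is only added when its field actually has a value.

```python
from typing import Mapping, Optional

# Hypothetical column names and sentence templates; substitute the headers from your own PIM export.
OPTIONAL_FIELDS = [
    ("brand", "The brand is {}."),
    ("material", "It is made from {}."),
    ("colour", "It comes in {}."),
    ("fit", "The fit is {}."),
]


def build_prompt(row: Mapping[str, Optional[str]]) -> str:
    """Join product data into one prompt, skipping empty fields (like IF(cell<>"", ...) in Excel)."""
    parts = [f"Write a 200-word product description for an ecommerce page about the {row['name']}."]
    for column, template in OPTIONAL_FIELDS:
        value = (row.get(column) or "").strip()  # treat None and blank cells the same way
        if value:  # only mention data we actually have
            parts.append(template.format(value))
    # Hypothetical guard-rail wording to discourage invented details.
    parts.append("Do not mention any features that are not listed above.")
    return " ".join(parts)


if __name__ == "__main__":
    # Hypothetical sample row: "material" and "fit" are missing, so those clauses are dropped.
    row = {"name": "Trail Runner Jacket", "brand": "Acme", "material": "", "colour": "navy", "fit": None}
    print(build_prompt(row))
```

Keeping the optional fields in a list means adding a new column is a one-line change rather than another nested IF.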

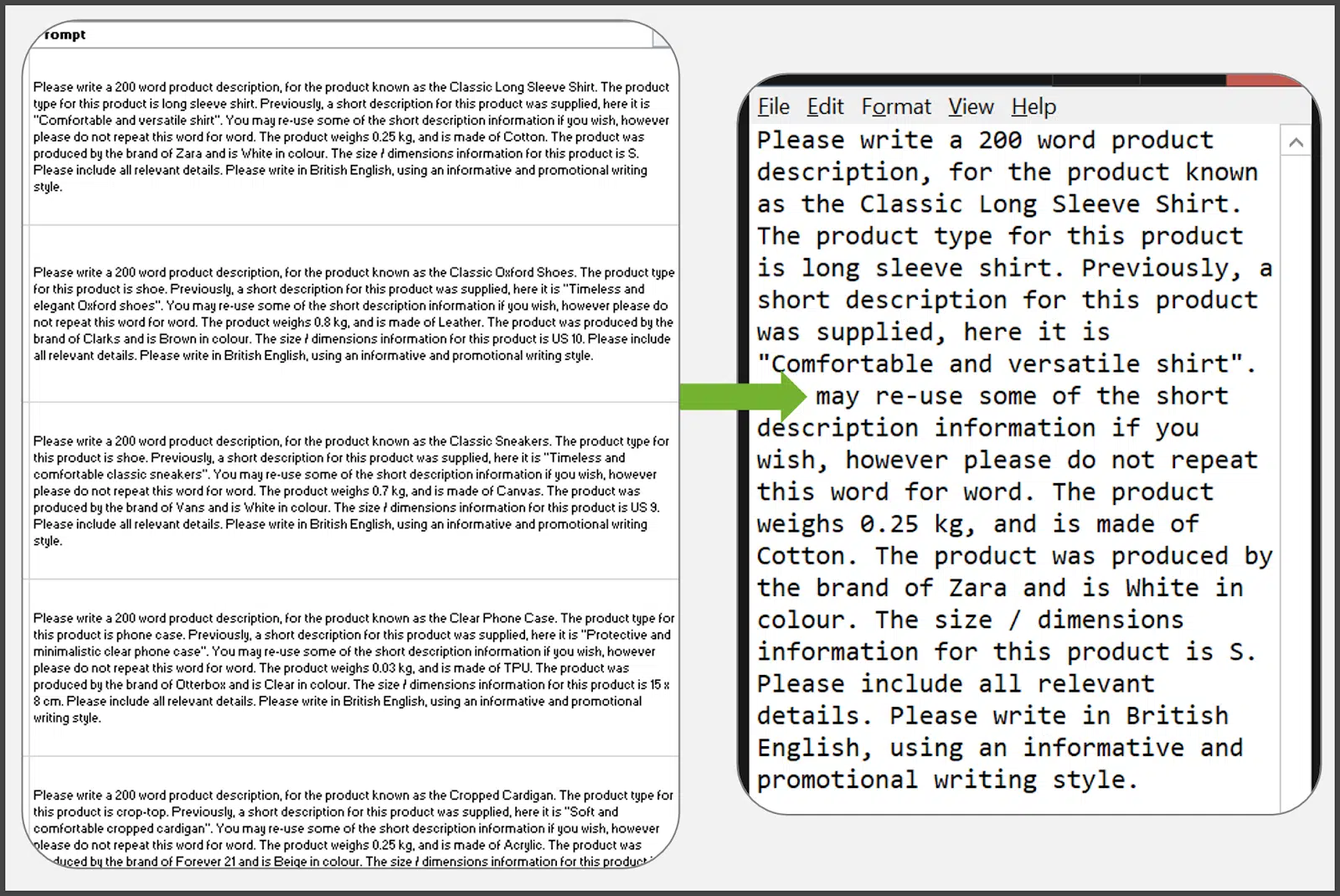

Once your formula returns a prompt for each row (in this case, for each product), copy and paste a few of the generated prompts into a word processor, or even Notepad.

It’s good to spot-check a few to ensure the text makes sense, even when some data items were missing.
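If you build prompts in a script rather than a spreadsheet, choosing rows for the spot check can be automated too. A minimal sketch, assuming each prompt is held as a hypothetical `(item_name, prompt, had_missing_data)` tuple, where the flag is something you record while building the prompt (for example, true if any optional field was blank). Rows with missing data are sampled first because they are the most likely to read awkwardly.

```python
import random
from typing import List, Sequence, Tuple

Prompt = Tuple[str, str, bool]  # (item_name, prompt, had_missing_data), a hypothetical layout


def pick_spot_checks(prompts: Sequence[Prompt], k: int = 5, seed: int = 0) -> List[Prompt]:
    """Return up to k prompts to review by hand, favouring rows that had missing data."""
    rng = random.Random(seed)  # fixed seed so the same sample can be re-checked later
    incomplete = [p for p in prompts if p[2]]
    complete = [p for p in prompts if not p[2]]
    # Take incomplete rows first, then fill any remaining slots with complete rows.
    picked = rng.sample(incomplete, min(k, len(incomplete)))
    remaining = k - len(picked)
    picked += rng.sample(complete, min(remaining, len(complete)))
    return picked
```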

Once you have verified that your Excel (or Google Sheets) formula is generating the types of prompts that you want, you can send a few of them to ChatGPT (manually, using the web interface) to see if you like the results.

The generated snippets will likely need human editorial oversight, but you want the AI to do as much of the work as possible. That’s why we put so much effort into the “prompt-crafting” process.

Happy with your initial prompts and responses? Good, then it’s time to move on.


Fetching your new product content snippets from OpenAI

So, you now have a list of products (or other types of webpages) that you’d like to generate content for.

In this example, we’re going with a fictitious sample of 100 products, each identified by URL, SKU or some other unique identifier.

Each product also has a rich prompt that you have generated. But pasting hundreds of prompts into ChatGPT’s web interface one at a time isn’t practical. So how can you send them all at once?

For this, you’ll need to get comfortable with basic scripting and with handling API requests. You’ll also need an OpenAI API account (and API key), which lets you access the models behind ChatGPT programmatically rather than through the web interface.

I put together a basic Python script for my agency. While I can’t share the script, I can review some of the processes and documentation needed.

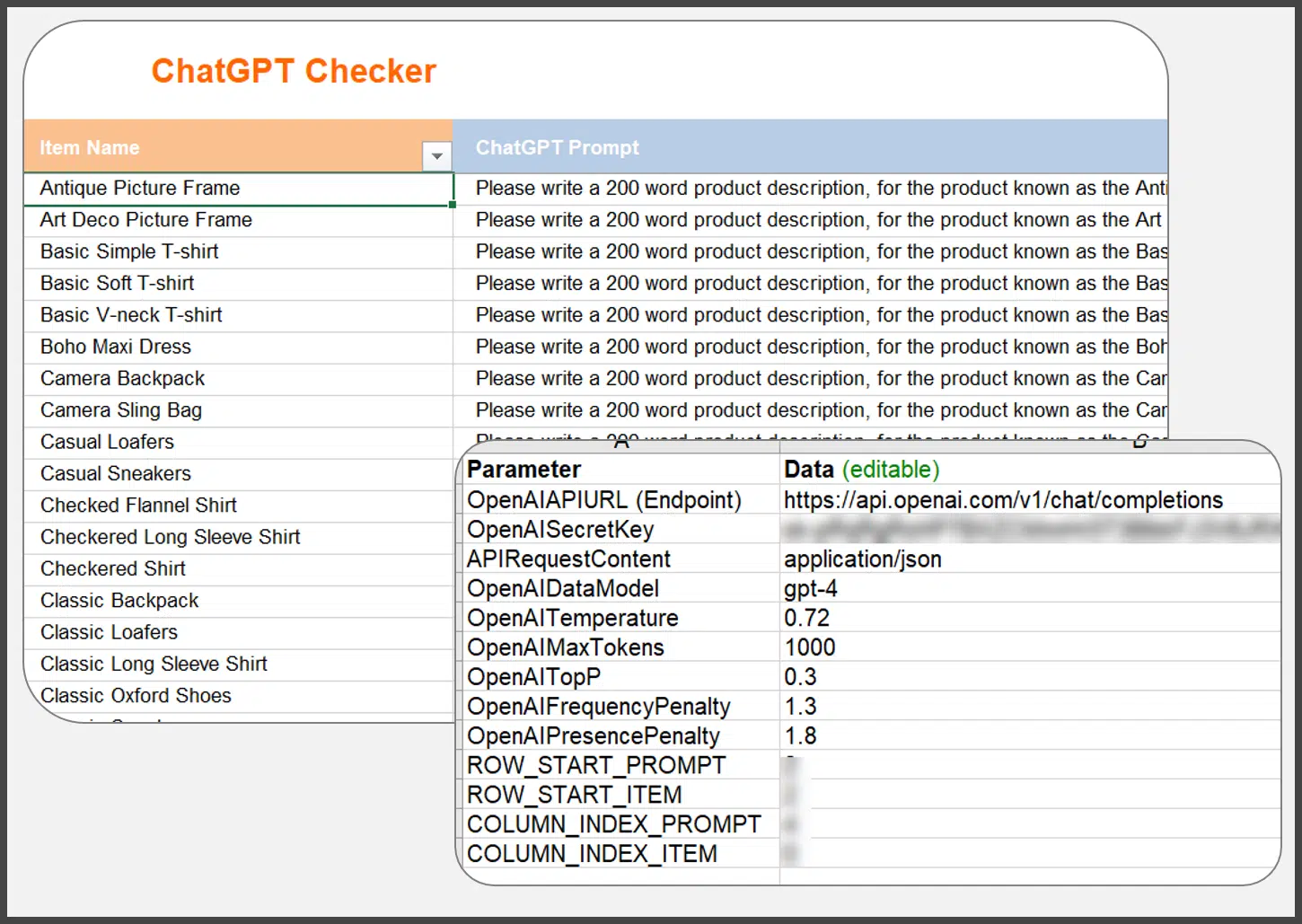

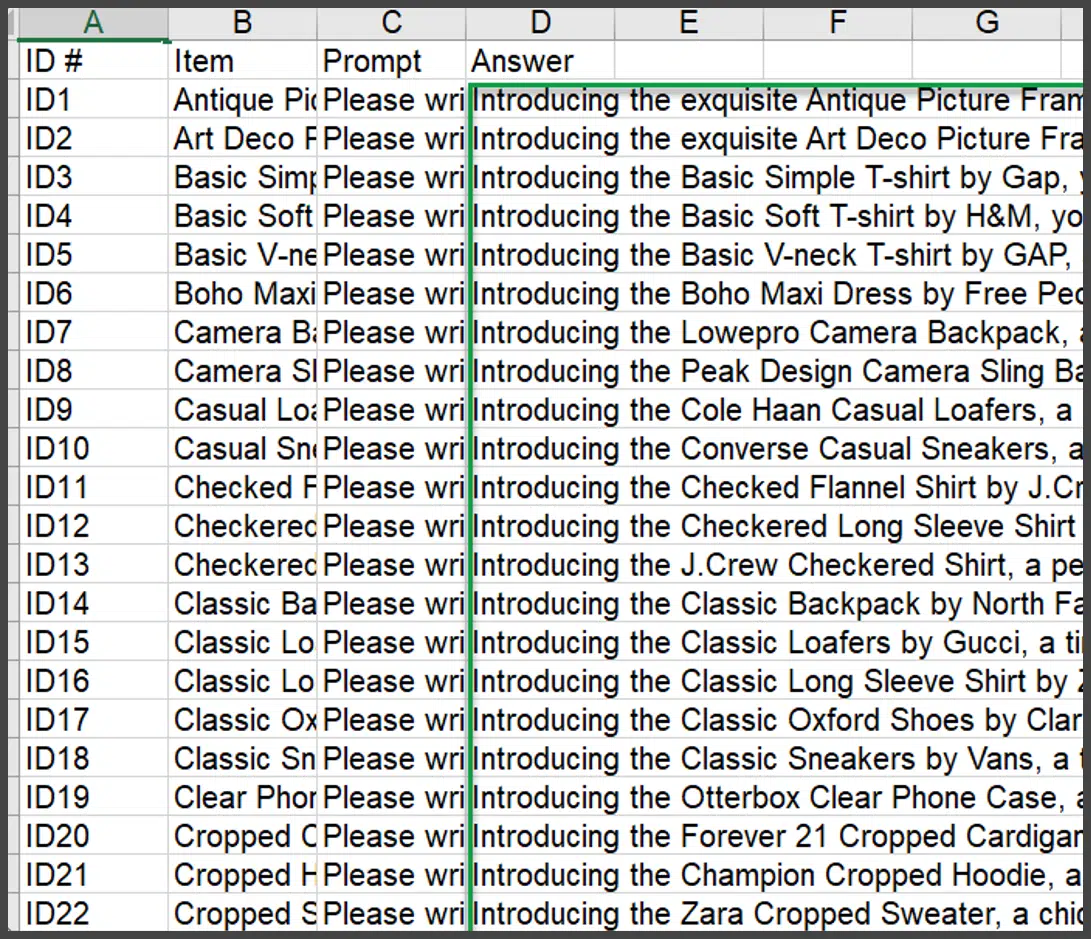

If I want to share this script more widely later, it’s best to build it on endpoints and technologies that marketers can access. So I started by producing an Excel sheet.

The sheet simply provides an area to list the items to be processed, each identified in the “Item Name” column (in this case, by product name). The prompts to be processed go here, too.
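If you’d rather not drive the process from Excel, the same input sheet can be exported to CSV and read with Python’s standard library. A minimal sketch, assuming a CSV export with an “Item Name” column (as in the sheet described above) and a “Prompt” column (an assumed header name). Rows without both values are skipped rather than sent to the API.

```python
import csv
import io
from typing import Iterable, List, Tuple


def load_items(lines: Iterable[str]) -> List[Tuple[str, str]]:
    """Read (item name, prompt) pairs from CSV text with "Item Name" and "Prompt" headers."""
    items = []
    for row in csv.DictReader(lines):
        name = (row.get("Item Name") or "").strip()
        prompt = (row.get("Prompt") or "").strip()
        if name and prompt:  # skip incomplete rows instead of sending blank prompts
            items.append((name, prompt))
    return items


# With a real export (hypothetical filename):
#   with open("items.csv", newline="", encoding="utf-8") as f:
#       items = load_items(f)
sample = io.StringIO("Item Name,Prompt\nTrail Runner Jacket,Write a description...\nBlank Row,\n")
print(load_items(sample))
```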

Another tab contains parameter settings for the request. (You can learn about all these via OpenAI’s documentation.)

Some of these settings fine-tune how creative the content is allowed to be, how readily the model uses unusual wording, the maximum number of tokens spent per request and even how much the content repeats itself. This is also where the OpenAI API key is saved.
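A settings tab like this maps onto the Chat Completions request parameters. The descriptions above most plausibly correspond to `temperature` (creativity), `top_p` (how far into less likely wording the model samples), `max_tokens` (spend per request) and `frequency_penalty` / `presence_penalty` (redundancy), though that mapping is our reading rather than something the author confirms. A minimal sketch that validates values against the ranges documented in OpenAI’s API reference before they’re used; the assumption that settings arrive as a dict (for example, read from the sheet) and the default model name are ours. The API key is deliberately kept out of the parameters, since it belongs in the request headers.

```python
from typing import Any, Dict

# Allowed ranges as documented in OpenAI's Chat Completions API reference.
RANGES = {
    "temperature": (0.0, 2.0),
    "top_p": (0.0, 1.0),
    "frequency_penalty": (-2.0, 2.0),
    "presence_penalty": (-2.0, 2.0),
}


def build_params(settings: Dict[str, Any]) -> Dict[str, Any]:
    """Turn raw values from a settings sheet into validated request parameters."""
    params: Dict[str, Any] = {"model": str(settings.get("model", "gpt-4"))}  # assumed default model
    for name, (low, high) in RANGES.items():
        if settings.get(name) in (None, ""):
            continue  # leave blank settings unset so the API default applies
        value = float(settings[name])  # spreadsheet cells may arrive as strings
        if not low <= value <= high:
            raise ValueError(f"{name}={value} is outside {low}..{high}")
        params[name] = value
    if settings.get("max_tokens") not in (None, ""):
        max_tokens = int(settings["max_tokens"])
        if max_tokens <= 0:
            raise ValueError("max_tokens must be a positive integer")
        params["max_tokens"] = max_tokens
    return params
```

Validating up front means a typo in the sheet fails immediately instead of partway through a batch of paid requests.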

Once a certain button within the spreadsheet is clicked, the Python script launches automatically and handles the rest:

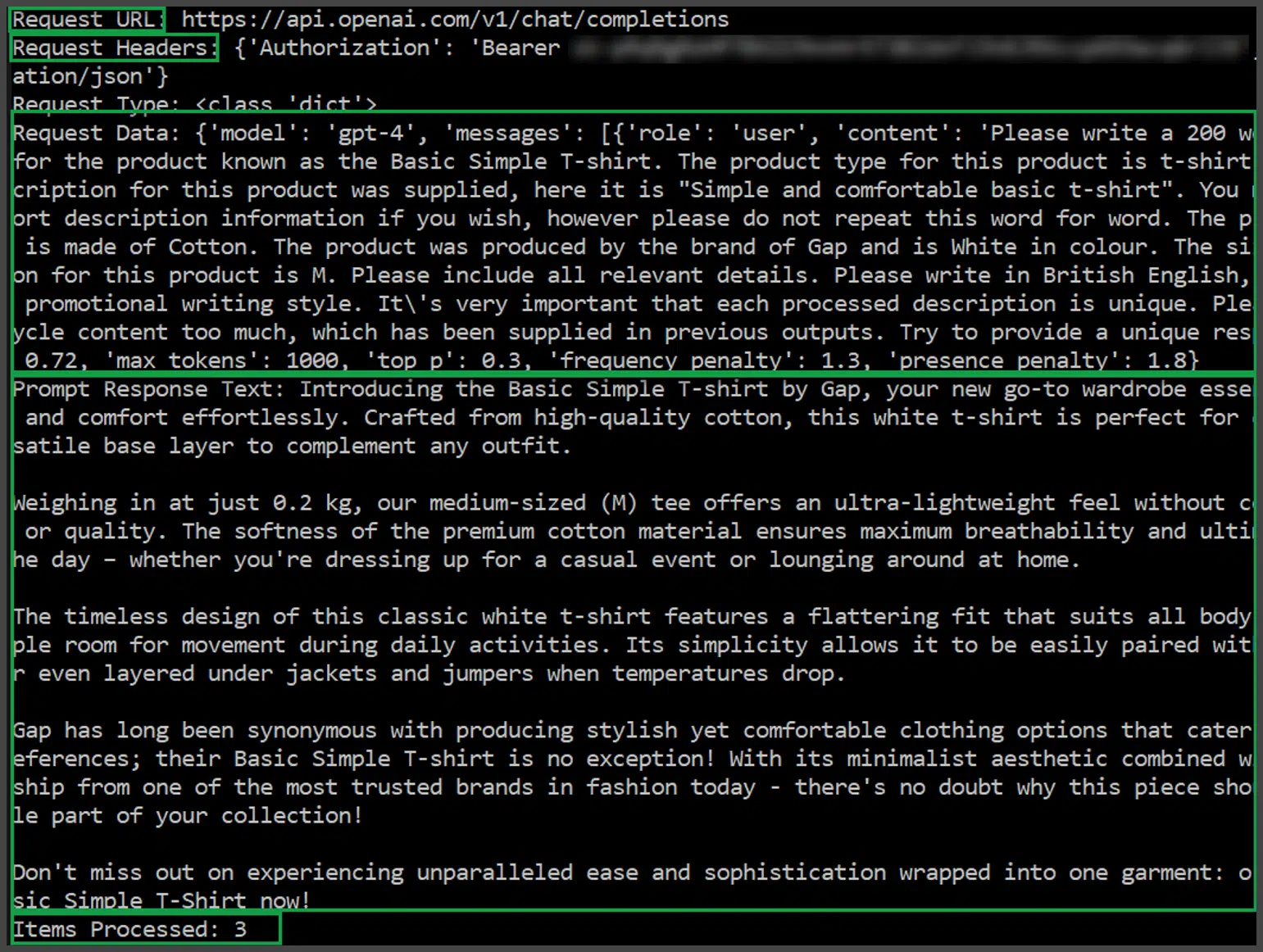

First, the script defines the request / endpoint URL. After this, the script sends the request headers and the request data.

Most parameters for the request headers and data can be tweaked within the spreadsheet pictured previously.

Finally, the response text is received from OpenAI and logged in the “data dump,” a separate spreadsheet.
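Since the author’s script isn’t shared, here is a minimal sketch of those steps using only Python’s standard library; it is not the author’s code. It assumes OpenAI’s Chat Completions endpoint, `https://api.openai.com/v1/chat/completions`, with the API key sent as a bearer token, and a `params` dict holding whatever settings you’ve configured (model, temperature and so on). Building the request is kept separate from sending it so you can inspect a request before spending any credits.

```python
import json
import urllib.request
from typing import Any, Dict

API_URL = "https://api.openai.com/v1/chat/completions"  # request / endpoint URL


def build_request(prompt: str, api_key: str, params: Dict[str, Any]) -> urllib.request.Request:
    """Assemble the headers and JSON body for one prompt."""
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    body = dict(params)  # e.g. {"model": "gpt-4", "temperature": 0.7, "max_tokens": 400}
    body["messages"] = [{"role": "user", "content": prompt}]
    data = json.dumps(body).encode("utf-8")
    return urllib.request.Request(API_URL, data=data, headers=headers, method="POST")


def send(request: urllib.request.Request, timeout: float = 60.0) -> Dict[str, Any]:
    """POST the request and return the decoded JSON response (raises HTTPError on failure)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```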
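Logging the responses can be sketched the same way. In a Chat Completions response, the generated text sits at `choices[0].message.content`. The sketch below uses a CSV file as a stand-in for the author’s “data dump” spreadsheet: it loops over the items, calls whatever sending function you pass in (for example, one that builds and POSTs the request), and writes one row per item. `run_batch` and its column layout are hypothetical, and a failed request is recorded in an “Error” column rather than stopping the batch.

```python
import csv
import io
from typing import Any, Callable, Dict, List, TextIO, Tuple


def extract_text(response: Dict[str, Any]) -> str:
    """Pull the generated snippet out of a Chat Completions response."""
    return response["choices"][0]["message"]["content"].strip()


def run_batch(
    items: List[Tuple[str, str]],
    call_api: Callable[[str], Dict[str, Any]],
    dump: TextIO,
) -> int:
    """Send each (item name, prompt) pair and write the results to a CSV data dump.

    Returns the number of snippets generated successfully.
    """
    writer = csv.writer(dump)
    writer.writerow(["Item Name", "Prompt", "Snippet", "Error"])
    ok = 0
    for name, prompt in items:
        try:
            snippet, error = extract_text(call_api(prompt)), ""
            ok += 1
        except Exception as exc:  # log and continue so one failure doesn't stop the batch
            snippet, error = "", repr(exc)
        writer.writerow([name, prompt, snippet, error])
    return ok
```

For a real run, open the dump with `open("data_dump.csv", "w", newline="", encoding="utf-8")` (hypothetical filename) and pass the file object as `dump`.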

I have three scripts for this deployment, though only one needs to run. I also have two separate spreadsheets, both of which are needed.

Once the script has worked through all of the queries, all of the text snippets are saved in the data dump spreadsheet.

Looking at that output, you may have some concerns about content uniqueness.

While all of the snippets begin with the same phrase (“Introducing the [product name]”), the content becomes more varied across the rest of each generated paragraph. So, it’s not as bad as it looks.

There are also things you can do to make each generated snippet more unique, such as explicitly instructing the AI to generate unique content (though you have to be quite firm and repetitive in your instructions to get anywhere).

You can also tweak the temperature and frequency_penalty parameters to adjust content creativity and reduce redundant language.

By weaving these technologies together (OpenAI’s API, Excel and Python), we can quickly generate text snippets for all of the input prompts.
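To tell whether prompt or parameter changes are actually helping, it can be useful to measure repetition rather than eyeball it. A minimal sketch (a simple heuristic of our own, not part of the author’s workflow) that counts how many snippets share the same opening words. The default of two words is an assumption chosen so that openings like “Introducing the [product name]” are grouped together even though the product names differ.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple


def shared_openings(snippets: Iterable[str], n_words: int = 2) -> List[Tuple[str, int]]:
    """Return opening phrases (first n_words, lowercased) used by more than one snippet."""
    openings: Counter = Counter()
    for text in snippets:
        words = re.findall(r"[\w'-]+", text.lower())  # crude tokenisation, ignores punctuation
        if words:
            openings[" ".join(words[:n_words])] += 1
    # Most common first, dropping openings that appear only once.
    return [(phrase, count) for phrase, count in openings.most_common() if count > 1]
```

Running it before and after a change (for example, a higher frequency_penalty) gives a rough, comparable number instead of an impression.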

From here, it’s up to you what you want to do with that newly processed data.

I highly recommend moving it into a format your editorial team can understand.

We have mitigated much of the risk of inaccurate output by crafting very rich prompts. However, you can never be certain until you check the output.
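Part of that check can be made systematic. A minimal sketch, assuming you maintain a hypothetical list of phrases that should only appear when the product data supports them (a sizing guide, a warranty, free returns and so on): it flags snippets that mention one of those phrases while the matching data field is empty, so editors can look at those first. The phrases and field names are placeholders. It’s a keyword screen, not a fact-checker, so it complements human review rather than replacing it.

```python
from typing import List, Mapping, Optional

# Hypothetical mapping: phrase the AI might invent -> data field that must back it up.
CLAIMS_NEEDING_DATA = {
    "size guide": "size_guide_url",
    "sizing guide": "size_guide_url",
    "warranty": "warranty",
    "free returns": "returns_policy",
}


def flag_unsupported(snippet: str, row: Mapping[str, Optional[str]]) -> List[str]:
    """List phrases in the snippet whose supporting data field is empty or missing."""
    text = snippet.lower()
    return [
        phrase
        for phrase, field in CLAIMS_NEEDING_DATA.items()
        if phrase in text and not (row.get(field) or "").strip()
    ]
```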

ChatGPT output notes

Assuming that you’re happy to work with ChatGPT, there are a few things to keep in mind:

- Let’s talk about the cost. It’s hard to predict exactly what a batch will cost when using OpenAI’s GPT-4 model via the API. You’re billed per token rather than per word (a token is roughly three-quarters of an English word), and prompt (input) tokens and response (output) tokens are priced separately. Longer, more detailed prompts and longer responses both use more tokens and cost more, and you can’t know the length of each response in advance.

- Our test batch of 100 prompts built from sample data cost only $1.74 to run and return, and generated 22,482 words of content overall. That seems like good value, but there’s much more to consider.

- Due to AI’s propensity to infer, a human editorial process is still fundamentally required (in our opinion).

- However, using this technology does transform a costly from-scratch content creation task into a much more cost-effective content editing task.

- The data / AI specialist’s time for prompt crafting and running scripts must also be factored in.

- On top of inferring where data is lacking, AI can also “creatively infer” things. In our sample data set, the AI decided to infer the existence of a sizing guide (clothing) within the produced product content. If no sizing guide existed, that would look pretty silly.

- Always send AI content through a human editorial review process for fact-checking, accuracy and (most importantly) additional creative flair.

- You can automate further by plugging in projects like Auto-GPT. These AI “agents” add more autonomous processing and task-handling capability on top of OpenAI’s models. However, projects like this still need your OpenAI API key. And because they’re still in their infancy, they can chew through a lot of credits before they complete tasks to a usable standard.
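The figures above can be turned into rough unit costs, which makes them easier to compare with copywriting rates. The first function simply reworks the article’s own numbers; the second estimates spend from token counts, since that’s how OpenAI bills API usage (each Chat Completions response reports `prompt_tokens` and `completion_tokens` in its `usage` field). The per-1,000-token prices are deliberately left as parameters: check OpenAI’s current pricing for your model rather than relying on any figure here.

```python
def unit_costs(total_cost: float, total_words: int, n_snippets: int) -> dict:
    """Break a batch's total cost down per snippet and per 1,000 words."""
    return {
        "per_snippet": total_cost / n_snippets,
        "per_1000_words": total_cost / total_words * 1000,
        "words_per_snippet": total_words / n_snippets,
    }


def estimate_cost(
    prompt_tokens: int,
    completion_tokens: int,
    input_price_per_1k: float,
    output_price_per_1k: float,
) -> float:
    """Estimate spend from token counts; prices are inputs because they vary by model and change over time."""
    return (
        prompt_tokens / 1000 * input_price_per_1k
        + completion_tokens / 1000 * output_price_per_1k
    )


# The article's test batch: $1.74 for 22,482 words across 100 prompts.
print(unit_costs(1.74, 22_482, 100))
# Roughly $0.0174 per snippet, $0.077 per 1,000 words and about 225 words per snippet.
```

The unit costs cover API spend only; as noted above, the specialist’s and editors’ time needs to be added on top.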

Scaling your content creation process with AI

AI can produce diverse, fit-for-purpose content snippets at scale with minimal intervention.

For long-form content, it’s probably still better to use the web interface and iterate on the AI’s responses.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.
