Google announced new applications of its MUM technology, including multimodal search with Google Lens, Related topics in videos and other new search result features, at its Search On event on Wednesday. These announcements are not an overhaul of how Google Search works. They do, however, give users new ways to search, and they give SEOs both new visibility opportunities and SERP changes to adapt to.

What is MUM?

Google first previewed its Multitask Unified Model (MUM) at its I/O event in May. Like BERT, it's built on a transformer architecture, but it is reportedly 1,000 times more powerful than BERT and can multitask to connect information for users in new ways.

In its first public application in June, MUM identified 800 variations of COVID vaccine names across 50 languages in a matter of seconds. That application, however, did not show off the technology's multimodal capabilities. The announcements made at Search On offer a better glimpse of MUM's multimodal potential.

MUM enhancements to Google Lens

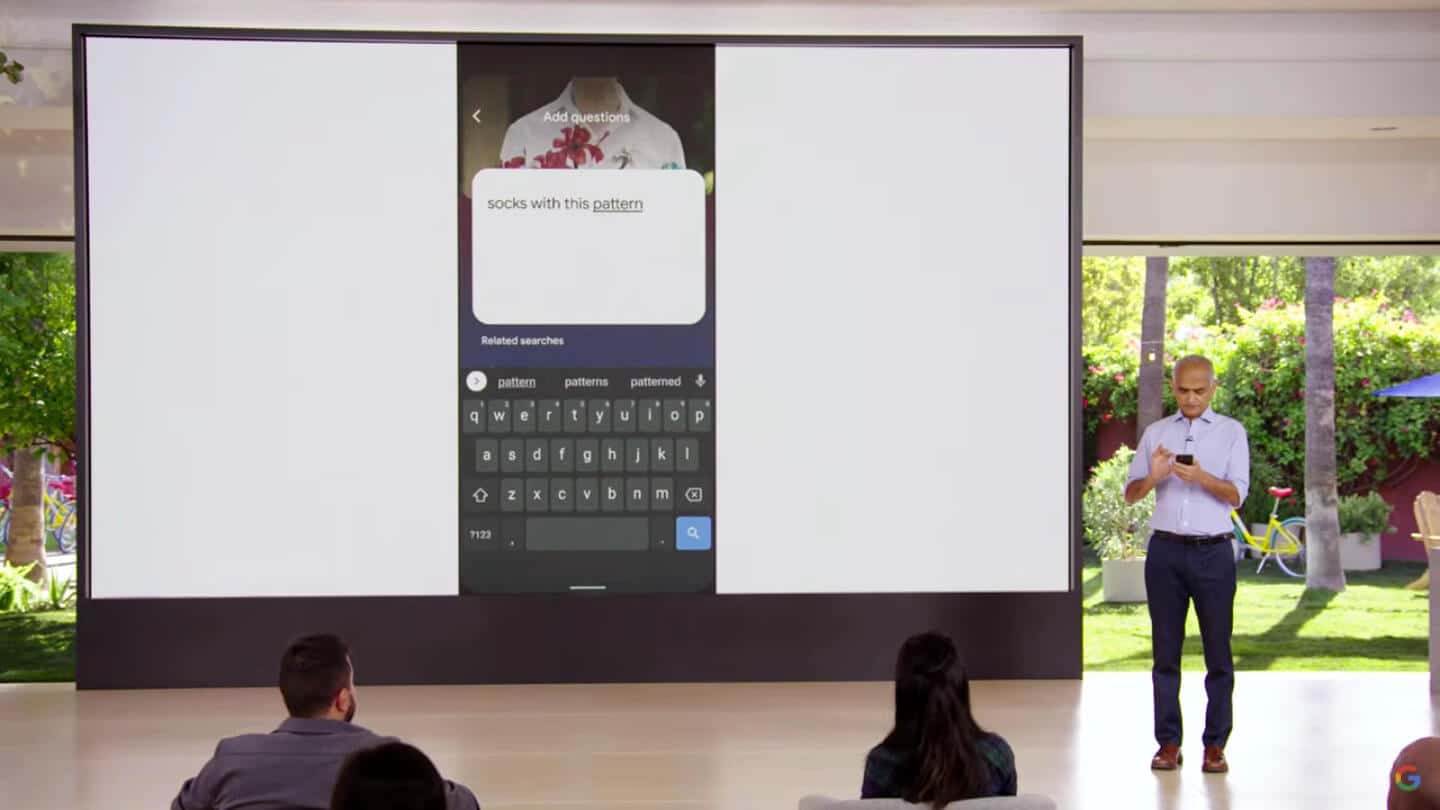

Google demoed a new way to search that combines MUM technology with Google Lens, enabling users to take a photo and add a query.

In the "point-and-ask" example above, a user takes a photo of an unfamiliar bicycle part and asks how to fix it. Google matches the search to an exact moment in a video, so the user doesn't have to research bike parts manually and then run a separate search for a tutorial.

In its announcement, the company provided another potential use case (shown above): users can take a picture of a pattern on a shirt and ask Google to find the same pattern on socks. Users could simply describe the pattern in text, but they may not find the exact match, or they may have to sift through many results before locating it. This capability will arrive in early 2022, a Google spokesperson told Search Engine Land.

Related topics in videos

Google is also applying MUM to show related topics that aren’t explicitly mentioned in a video.

In the example above, the video does not explicitly say "macaroni penguin's life story," but Google's systems understand that the topics are related and suggest that query to the user. This functionality will launch in English in the coming weeks, and the company will add more visual enhancements over the coming months. It will first be available for YouTube videos, but Google is also exploring ways to make it available for other videos.

Features that will eventually leverage MUM

Google also unveiled some new SERP features that are based on other technologies, but the company expects to improve them with MUM over the coming months.

"Things to know." This feature lists various aspects of the topic the user searched for. By showing the dimensions of a topic that other people typically search for, Things to know may help users reach the information they want faster.

In the example above, Google shows aspects of the query (“acrylic painting”) that searchers are likely to look at first, like a step-by-step guide or acrylic painting using household items.

“The information that shows up in Things to know, such as featured snippets, is typically information that users would see by directly issuing a search for that subtopic,” a Google spokesperson said. This feature will also be launching in the coming months.

Refine and broaden searches. The “Refine this search” and “Broaden this search” features enable users to get more specific with a topic or zoom out to more general topics.

Continuing with the “acrylic painting” example from above, the Refine this search section shows suggestions for acrylic painting ideas, courses and so on, while the Broaden this search section shows related, but more general topics, like styles of painting. These features will also launch in English in the coming months.

More announcements from Search On

In addition to the MUM-related announcements above, Google also previewed a more “visually browsable” interface for certain search results pages, enhancements to its About this result box, a more “shoppable” experience for apparel-related queries, in-stock filters for local product searches, as well as the ability to make all images on a page searchable via Google Lens. You can learn more about those features in our concurrent coverage, “Google search gets larger images, enhances ‘About this result,’ gets more ‘shoppable’ and more.”

Why we care

When Google first unveiled MUM, it touted the technology’s multimodal capabilities and power with abstract examples and no rollout dates. Now, we have a better idea of what MUM can actually do and a roadmap of features to expect.

The enhancements to Google Lens offer a new, and perhaps more intuitive, way to leverage multimodal search than anything the industry has seen before. Google's e-commerce example shows how this feature may help the search engine become more of a player in that sector, while making it even more important for merchants to apply product schema and submit accurate data feeds so that their products can show up on Google.

The other MUM-related announcements (Related topics in videos, Things to know, Refine this search and Broaden this search) are all about helping users learn more through related topics. These features may give SEOs an opportunity to get in front of users when Google connects a search, or a video, to a related search or video that they rank for.

The interconnectivity of Google's search results and features may offer users new ways to arrive at whatever they're seeking. If Google discloses how someone arrives at a publisher's content (for example, through Refine this search suggestions), this could reveal new user journeys to optimize for, along with the business opportunities that may come with them. These features are also another step away from the ten blue links of old, and SEOs will have to adapt to the changes while making the most of the new visibility opportunities they offer.

In addition, these announcements, along with the other Search On announcements (visual browsing with larger images and more shoppable search features) may provide users with new and more intuitive ways to search, which can help the company maintain its position as the market leader.
