Sundar Pichai, CEO of Google’s parent company Alphabet, previewed a new conversational model called LaMDA, or “Language Model for Dialogue Applications,” at the company’s I/O event on Tuesday. The new language model is designed to carry on an open-ended conversation with a human user without repeating information. LaMDA is still in early-phase research, with no rollout dates announced.

How it’s different from other models. LaMDA is a transformer-based model, like BERT and MUM, which Google also showcased at I/O. Similar to those two, it can be trained to read words, understand the relationship between words in a sentence and predict what word might come next.
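To make "predict what word might come next" concrete, here is a toy sketch. It is not how LaMDA, BERT or MUM work: it swaps the transformer for a simple bigram counter, and the corpus and the function names `train_bigram` and `predict_next` are invented for illustration. It only shows the basic idea of learning which word tends to follow another.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical three-sentence corpus, made up for this example.
corpus = [
    "pluto is a dwarf planet",
    "pluto is very cold",
    "a paper airplane is a toy",
]
model = train_bigram(corpus)
print(predict_next(model, "pluto"))  # prints "is"
```

A real language model replaces these raw counts with learned parameters and considers far more context than the single preceding word.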

What differentiates LaMDA is that it was trained on dialogue, and Google has put an emphasis on training it to produce sensible and specific responses, instead of more generic replies like “that’s nice,” or “I don’t know,” which may still be appropriate, albeit less satisfying for users.

“Sensibleness and specificity aren’t the only qualities we’re looking for in models like LaMDA,” Google said in its blog post. “We’re also exploring dimensions like ‘interestingness,’ by assessing whether responses are insightful, unexpected or witty.” The company also wants LaMDA to produce factually accurate responses.

Ethics and privacy are priorities, Google says. Models trained on datasets from the internet can contain bias, which may result in them mirroring hate speech or spitting out misleading information. “We have focused on ensuring LaMDA meets our incredibly high standards on fairness, accuracy, safety and privacy,” Pichai said on stage at Google I/O. “From concept all the way to design, we are making sure it is developed consistent with our AI principles.”

The ethical and privacy concerns that may arise when LaMDA rolls out will depend on the extent of its capabilities and how it is integrated into existing Google products. Since those details have yet to be fully revealed, we’ll have to wait and see how Google addresses any potential issues. This may be an especially important element for this product (or any product or feature that could potentially violate a user’s privacy) given the recent controversy surrounding Google’s FLoC initiative.

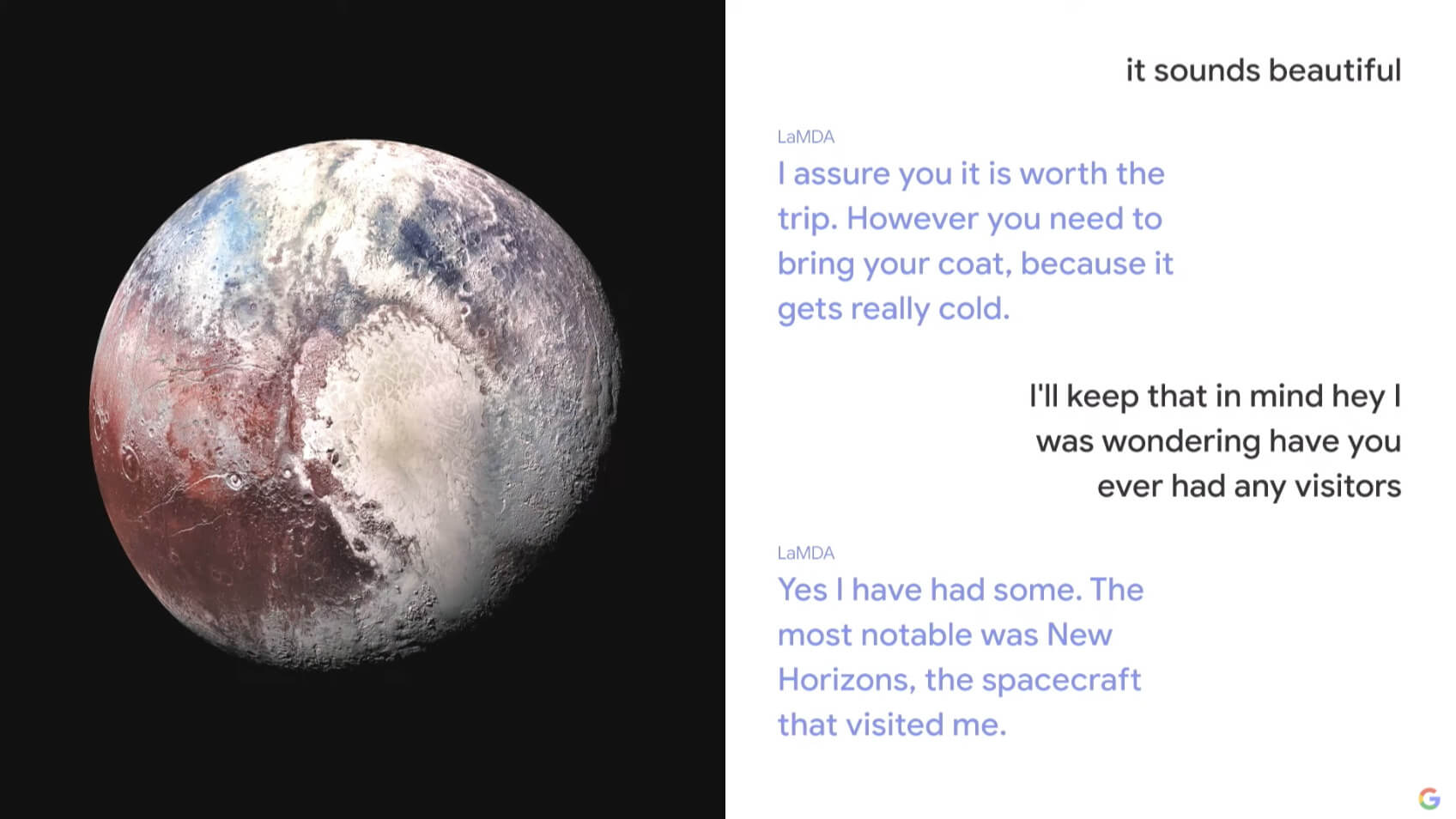

Potential applications. At I/O, LaMDA was shown off personifying the planet Pluto and, in a second demo, a paper airplane. The conversations were Q&A-style between the user and LaMDA, but LaMDA went beyond providing direct, Google Assistant-like answers, offering nuanced responses that some might even consider witty.

Pichai also mentioned Google’s focus on developing multi-modal models that can understand information across text, images, audio and video. He alluded to potential LaMDA applications like asking Google to “find a route with beautiful mountain views,” or using it to search for an exact point within a video. “We look forward to incorporating better conversational features into products like Google Assistant, Search and Workspace,” he said.

Google has not offered any other details on which of its products might include LaMDA or how it might be incorporated. Depending on how sophisticated the model is, one could imagine LaMDA helping users find the products they’re looking for or sift through local business reviews, for example.

Why we care. Conversational dialogue with Google may enable users to search for information or products in ways that are currently impossible. If it works and is widely adopted (and that’s a big “if” at this point), we may see a shift in search behavior, which may mean businesses have to adapt to ensure their content or products are still discoverable.

If Google incorporates it into existing products, which it almost certainly will, those products may become more useful for more users. That might give Google an important edge over its competitors and strengthen its own ecosystem unless those competitors are also able to deliver similar functionality.